Computational Infrastructures

Posted by Anthony Discenza on 2022-10-15

Over the past year and a half, I’ve been experimenting extensively with various text-to-image generators, including Dream, NeuralBlender, Midjourney, Dall-E 2, and Stable Diffusion. Because my work as an artist frequently explores the tensions between textual and visual forms of representation, I’ve felt it necessary to investigate a technology that so directly (and seemingly effortlessly) sutures the two together.

While the arrival of these generative image models has been met with overheated techno-positivism on one hand and open hostility on the other, the reality is that these tools are not going away; in fact, they are already rapidly becoming entrenched across multiple industries as their sophistication increases. What is required, then, is to think through the far-reaching implications of these machine-learning technologies deliberately, and to put the tools themselves in the service of that inquiry by considering the ways in which they are both similar to and radically different from existing visual technologies.

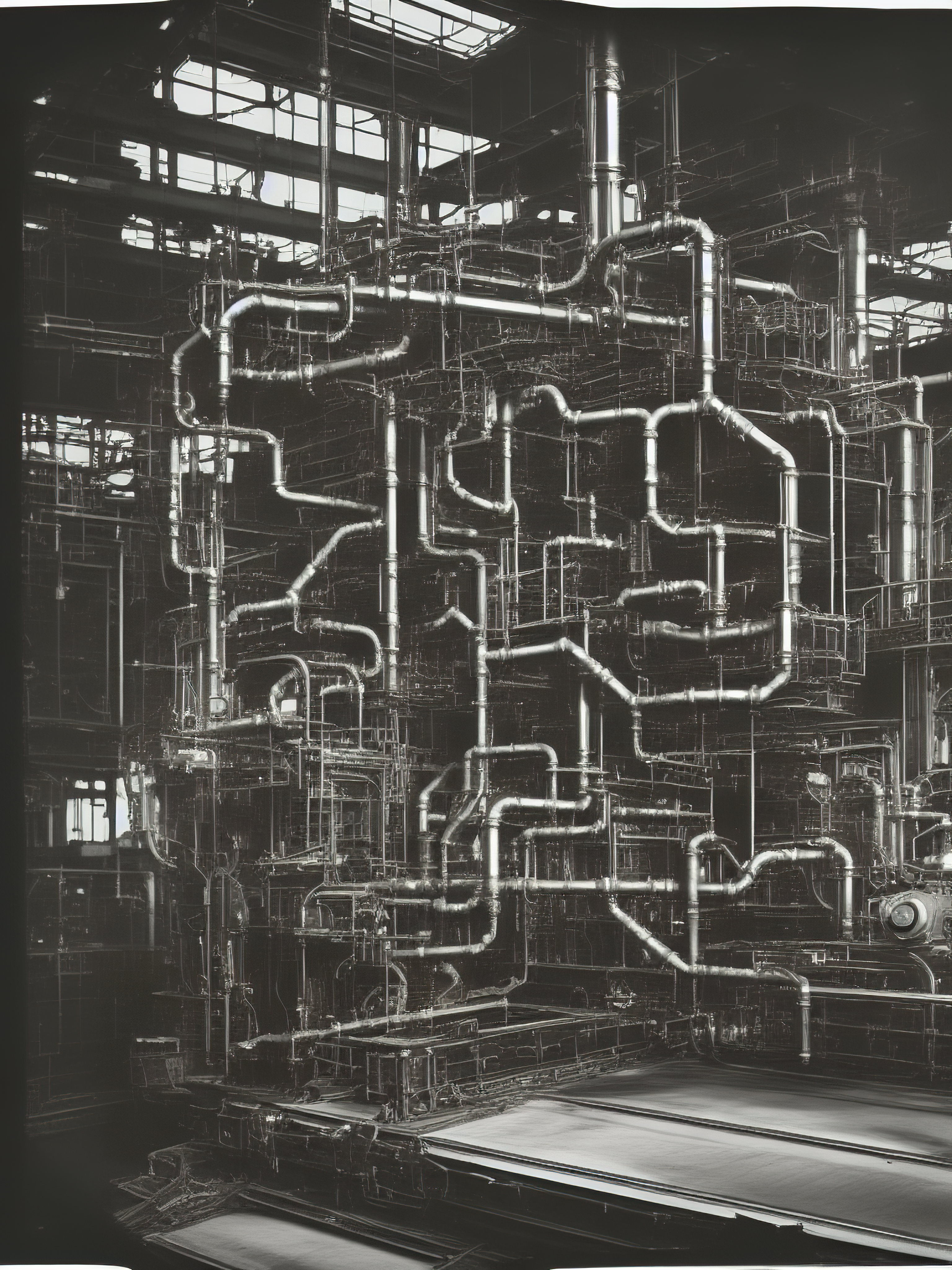

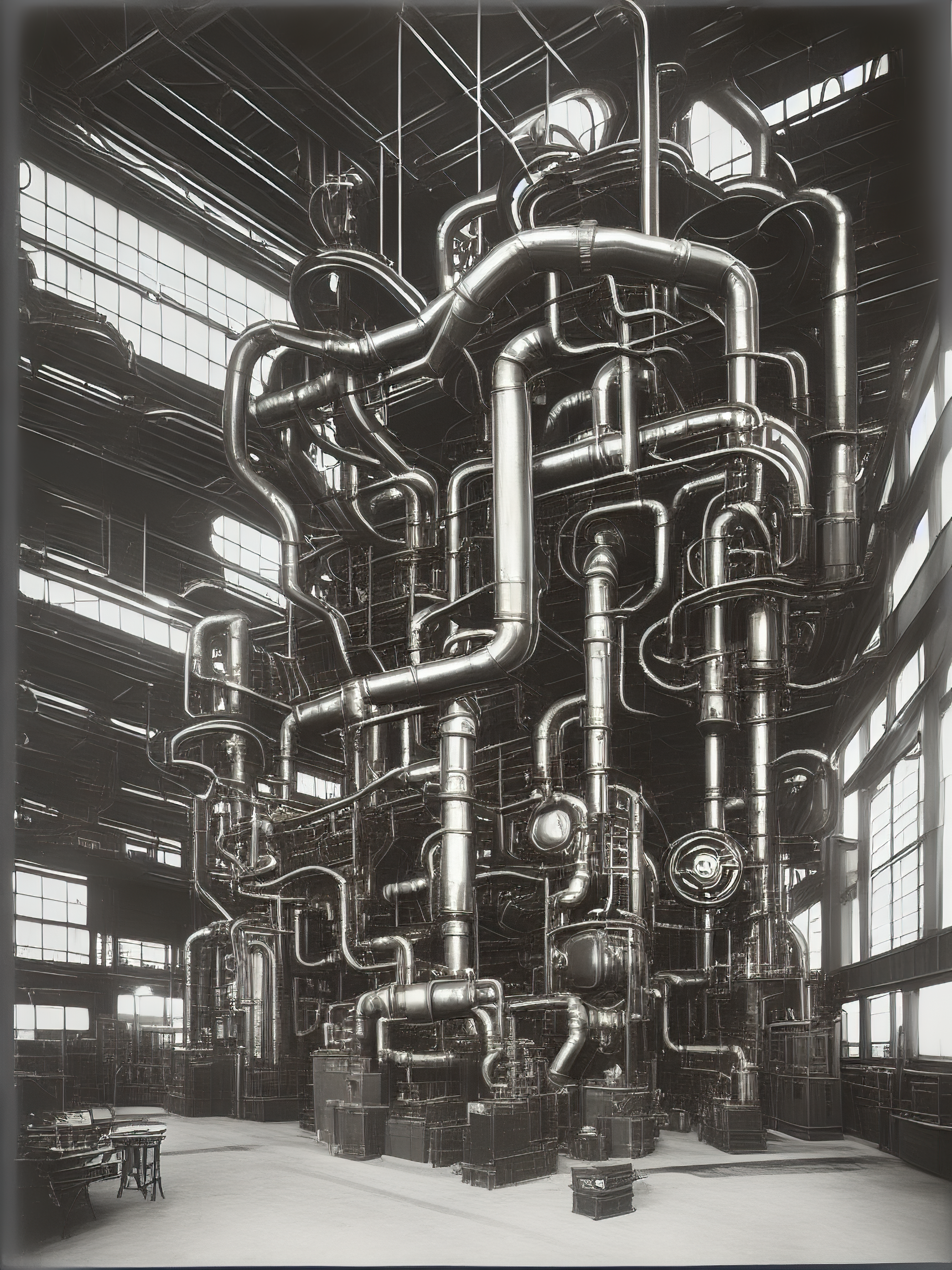

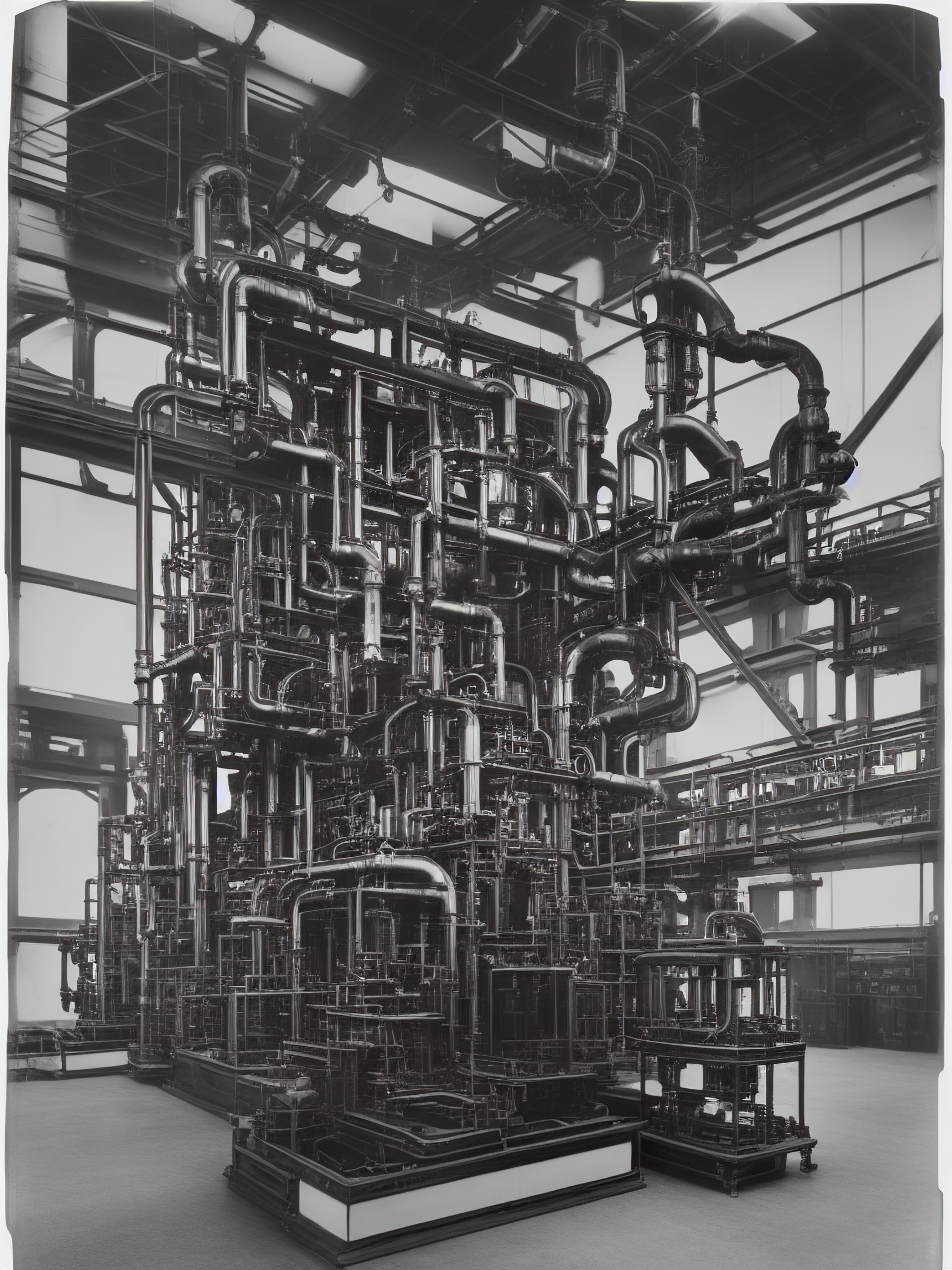

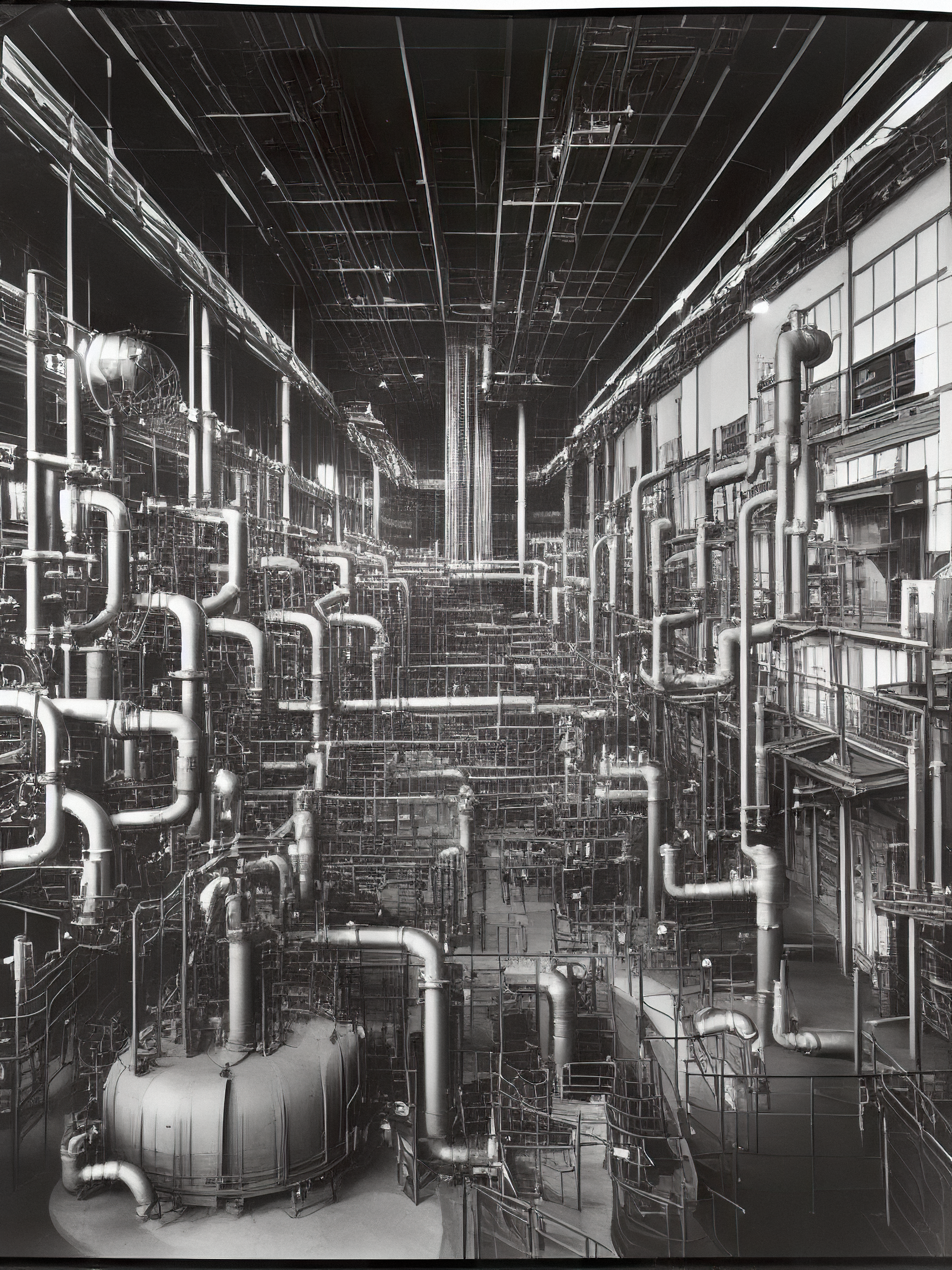

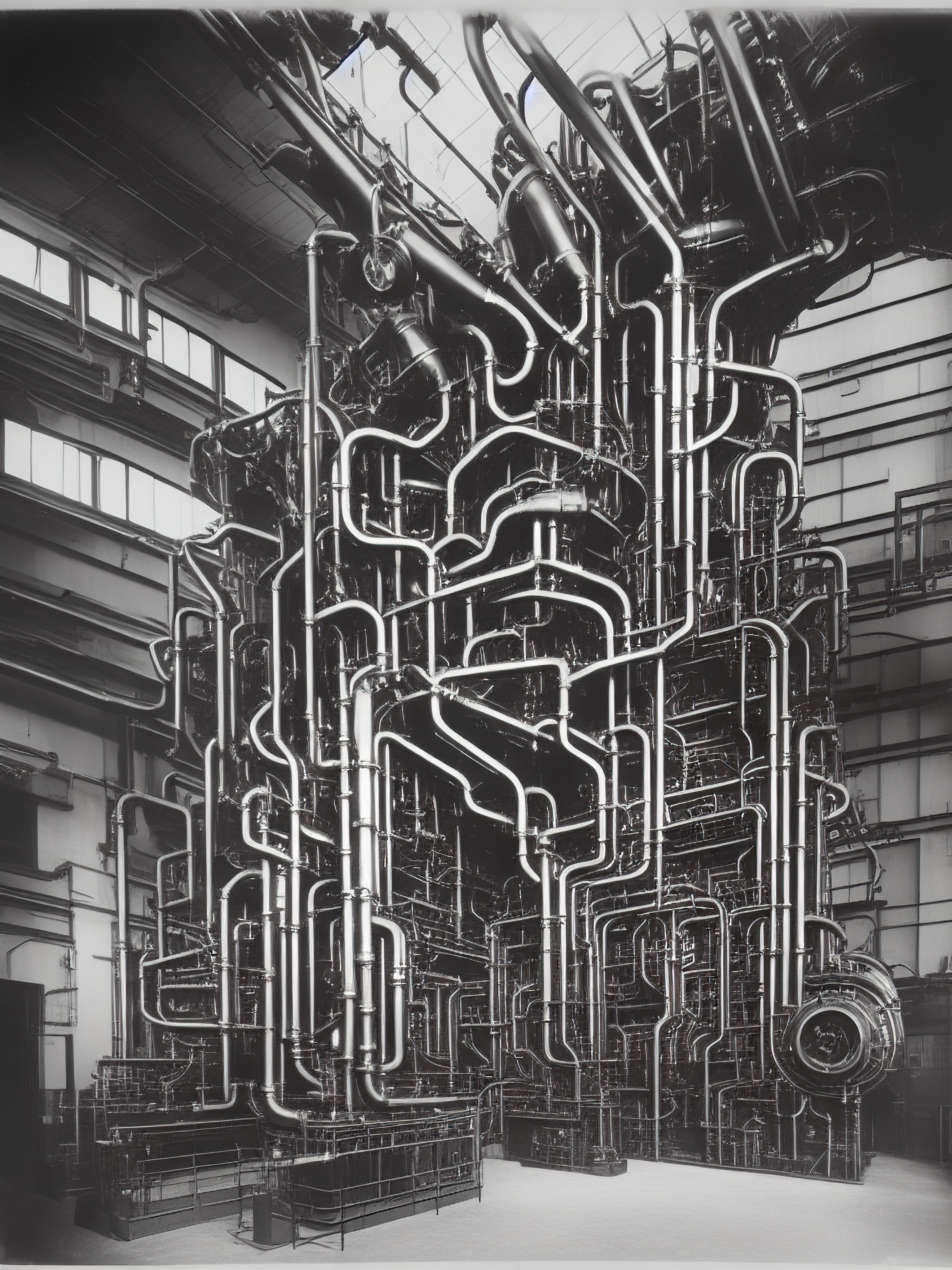

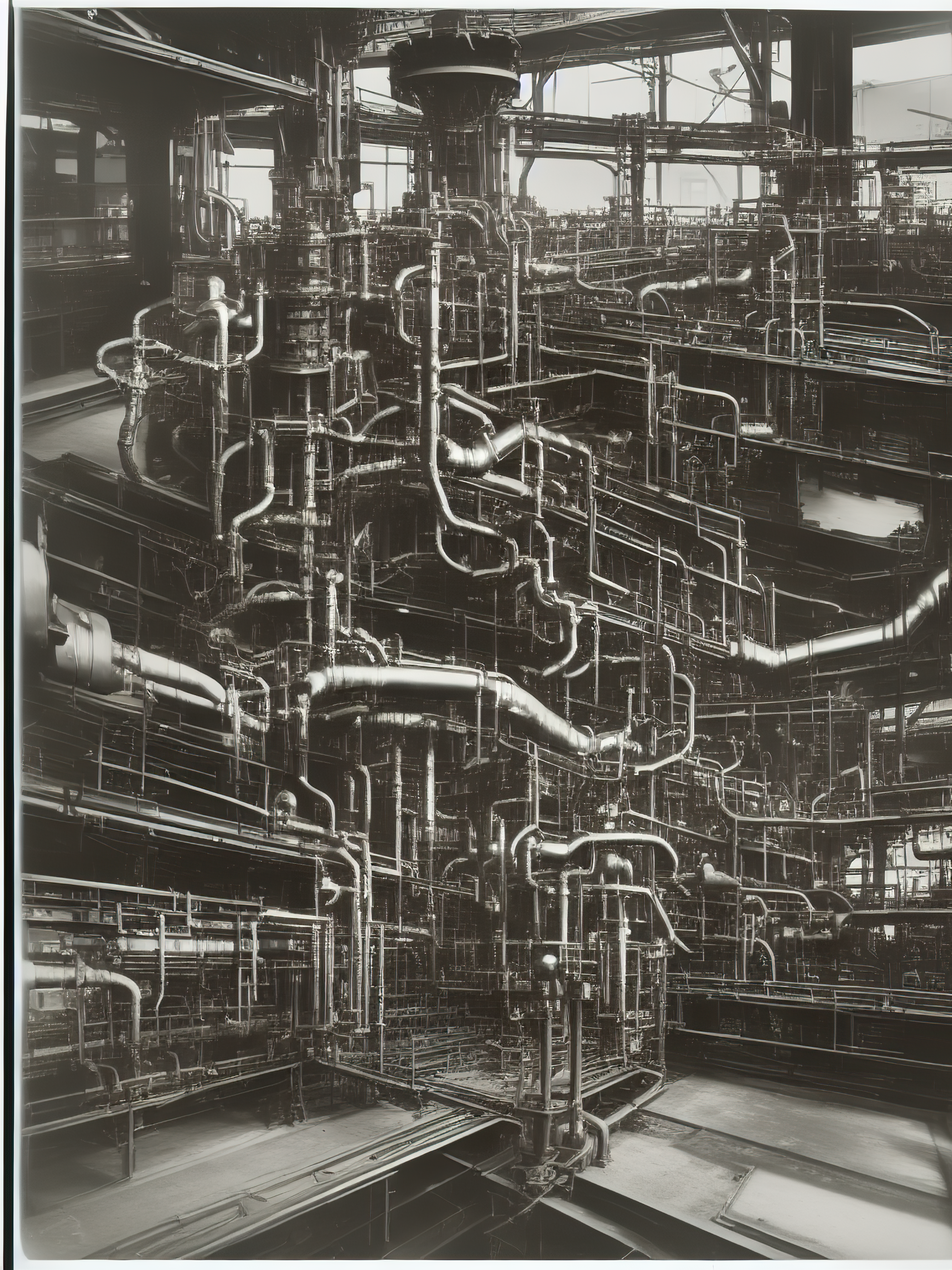

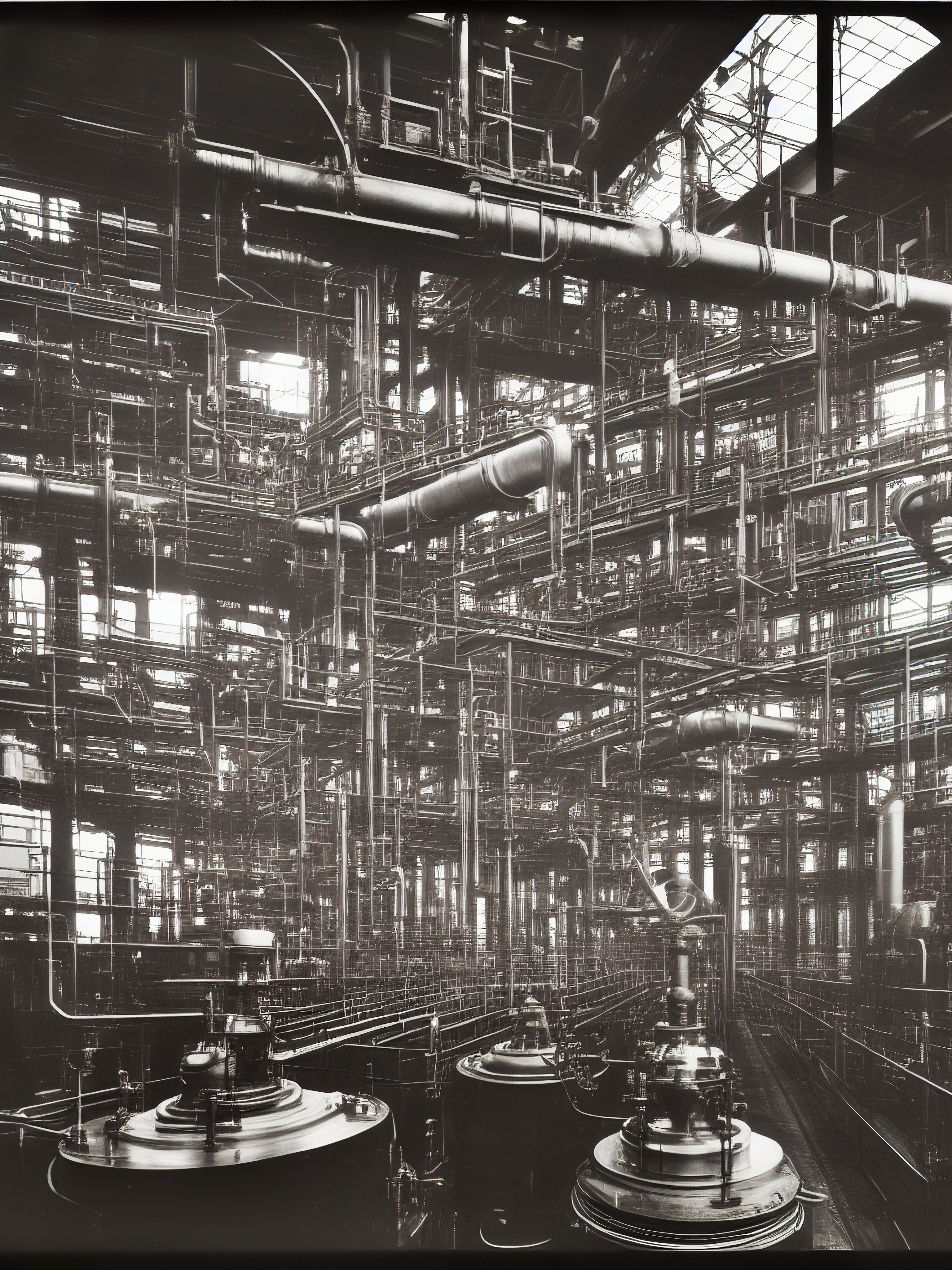

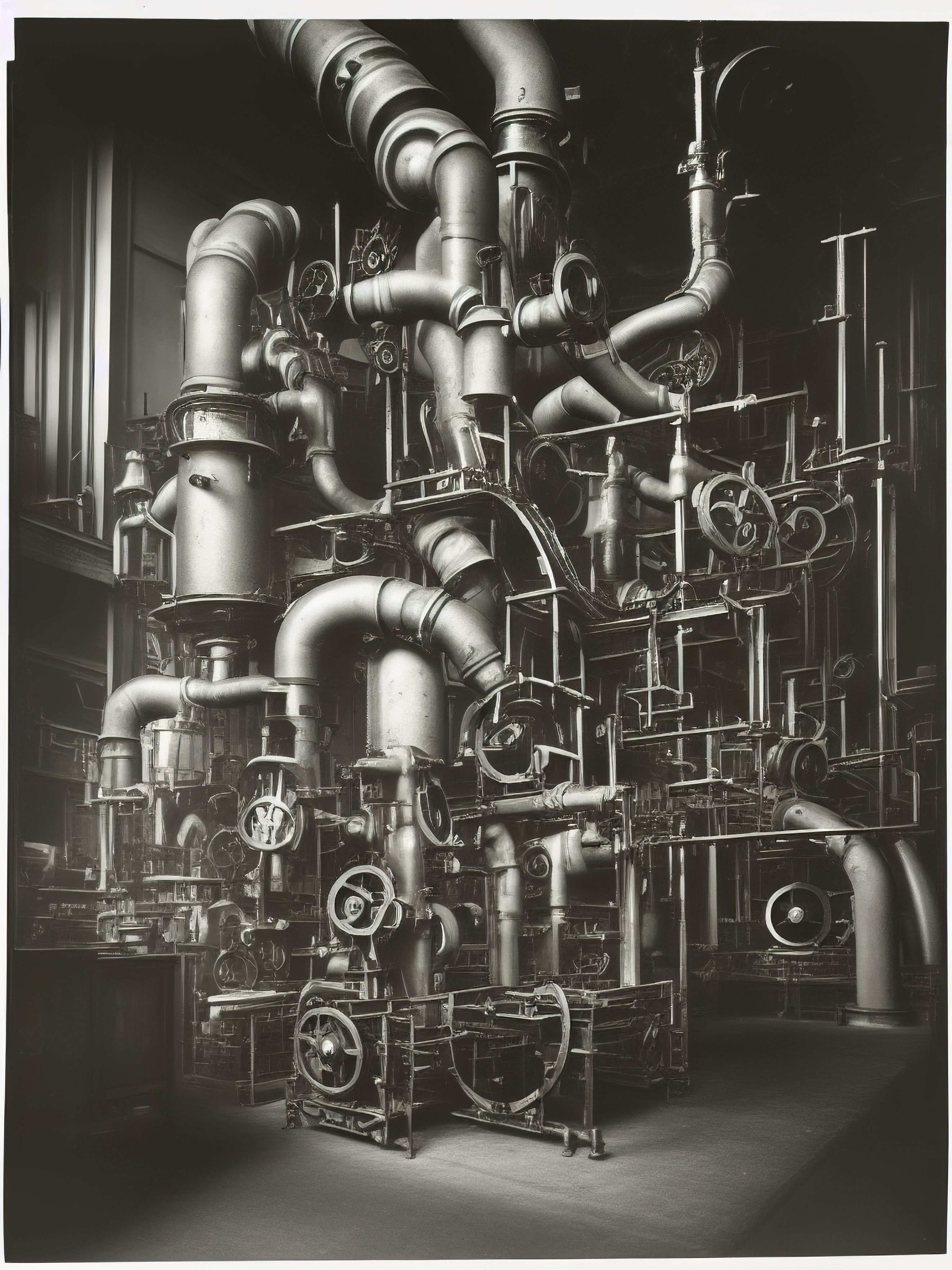

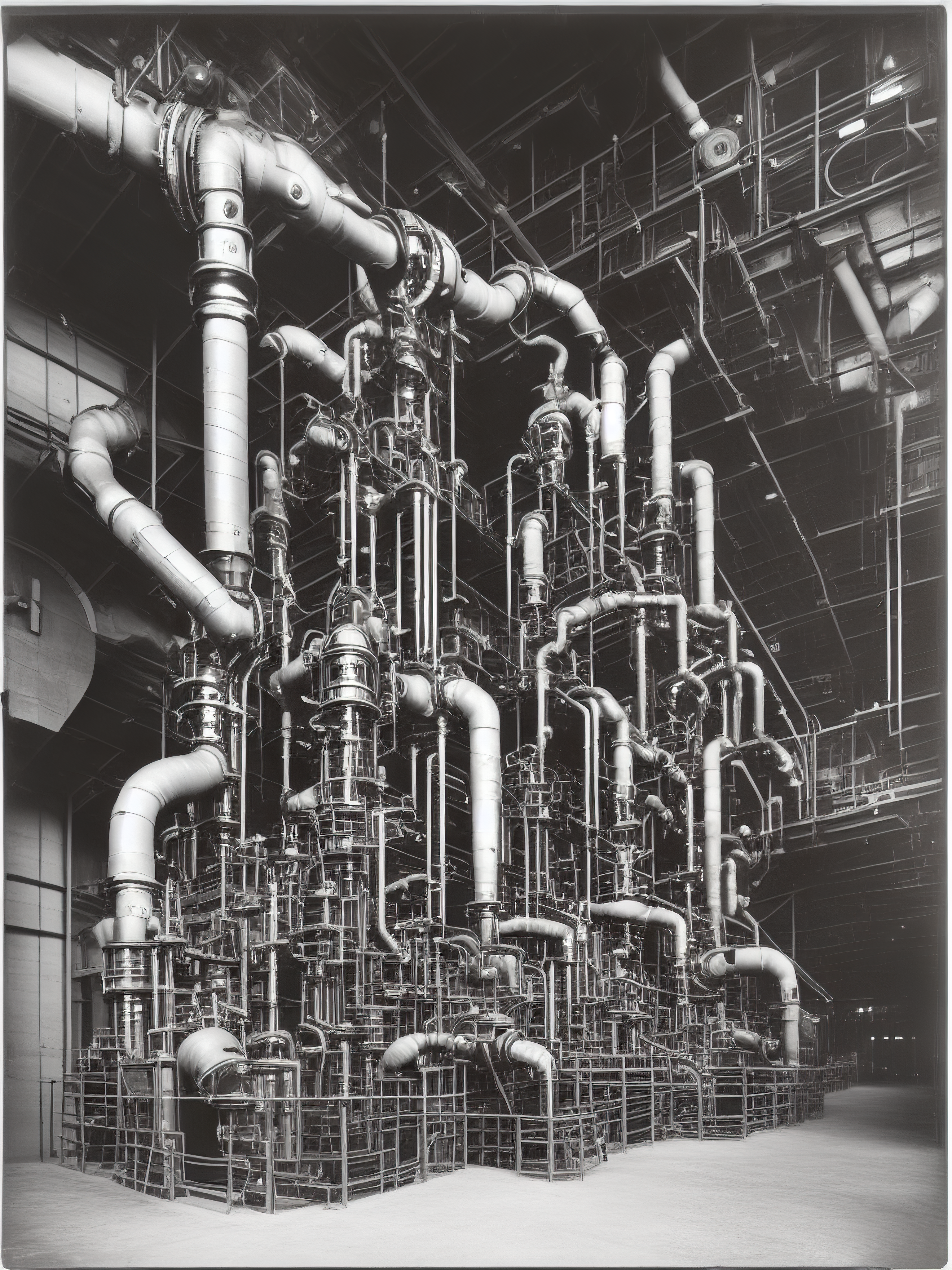

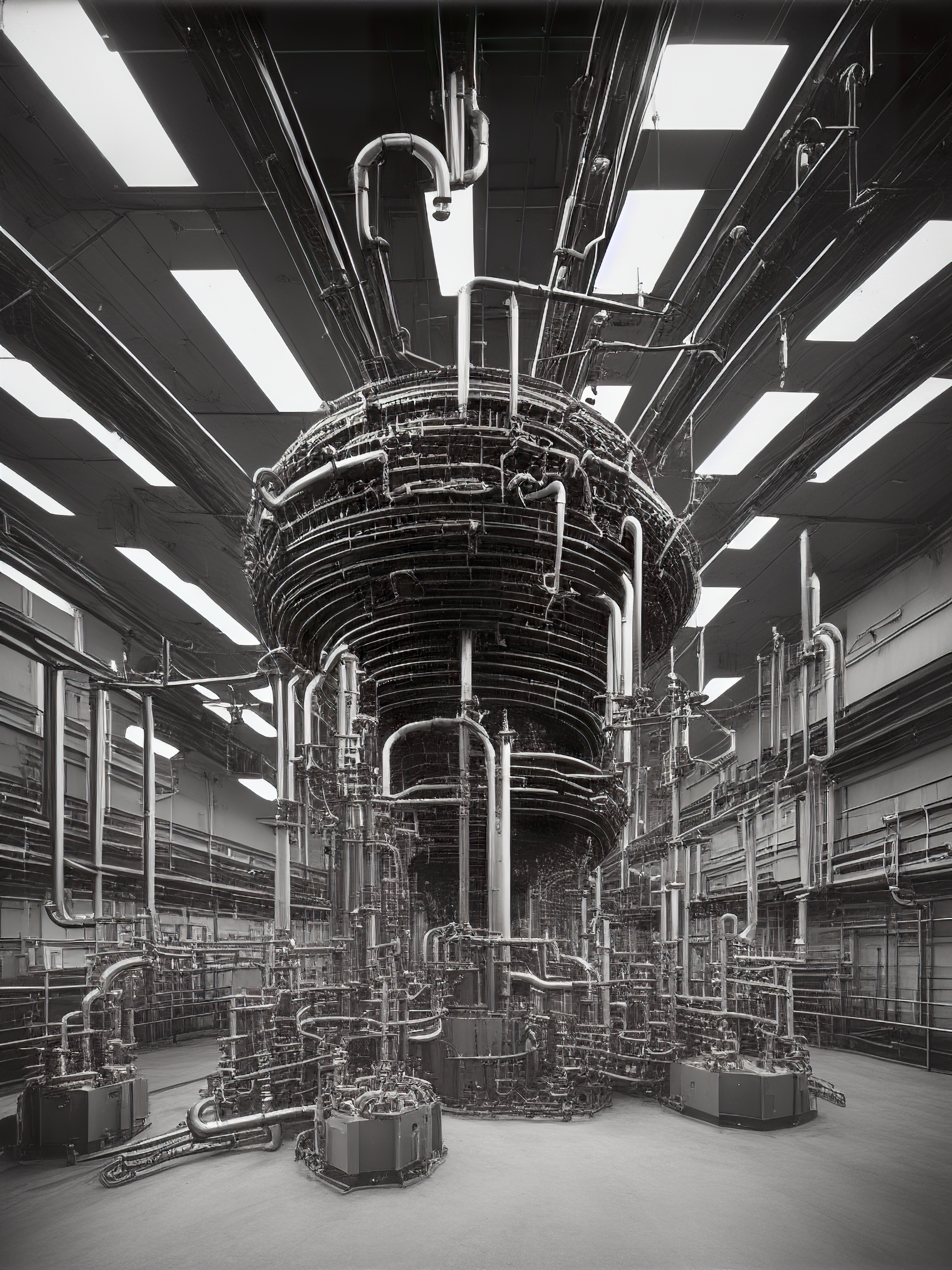

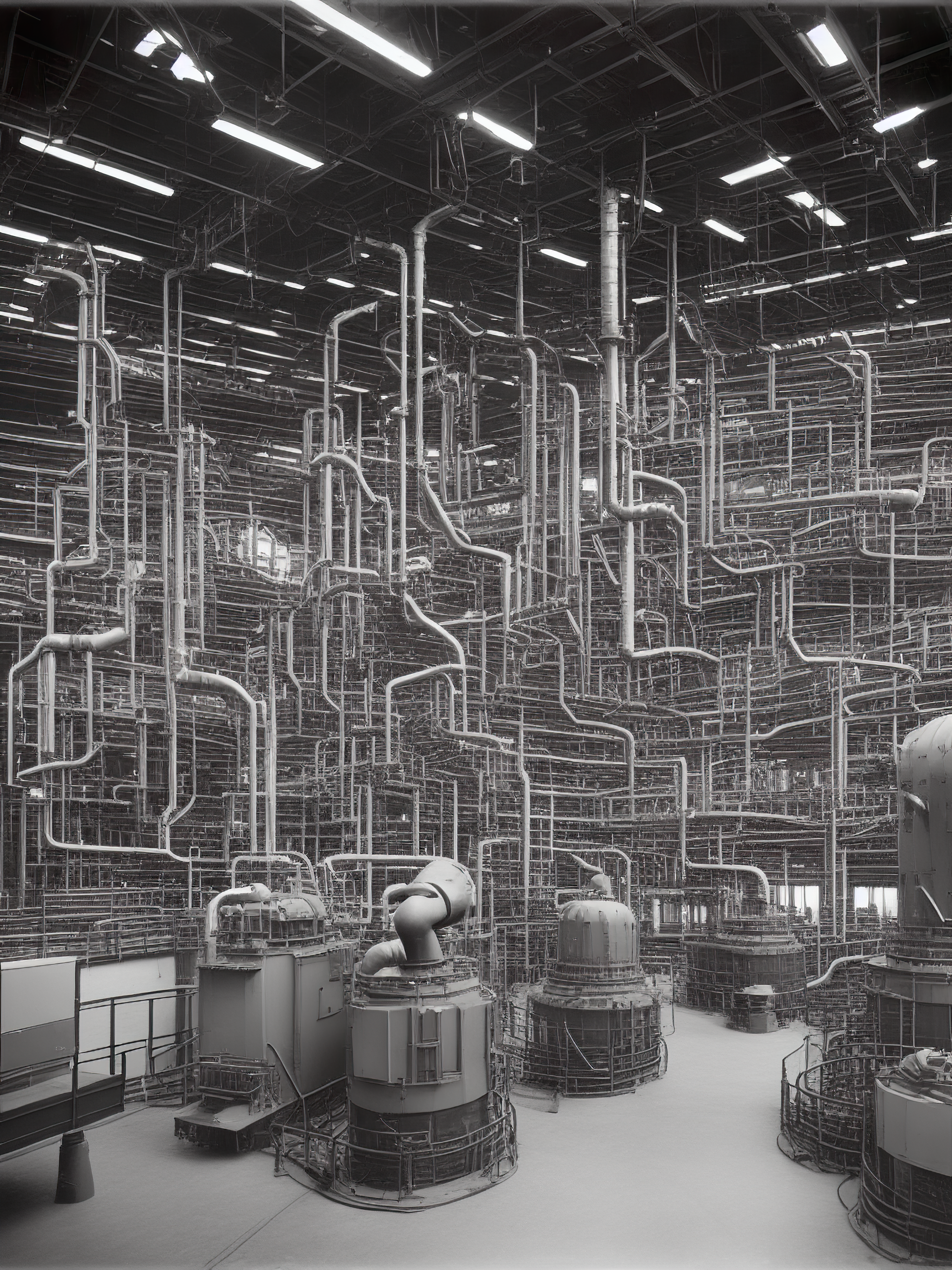

This collection of images, part of a larger series, arises from meditations on early forms of mechanical image reproduction (such as the photogravure process), as well as on the massive physical infrastructures that our ever-increasing demand for computation entails. Over the past decade or so, computing industries have pervasively employed the language of dematerialization to describe their operations, and this tendency has diffused into the culture at large. Yet there is nothing immaterial about “the cloud,” which in reality consists of hundreds of thousands of servers stacked in massive physical structures, each consuming huge amounts of energy. These facilities are interconnected globally by a web of power plants, transmission lines, and undersea cables, and they are accessed through phones, laptops, VR headsets, and other interfaces, all of which are produced via extractive systems with detrimental costs to humans and the environment alike. The language of dematerialization seeks to sweep these costs under the rug by encouraging us to imagine computing technologies as ephemeral or mystical forces associated with the processes of nature itself.

Because these image generators rely not only on this globally distributed infrastructure but also on vast datasets built from the labor of millions of individuals uploading billions of images to the web, it seemed fitting to prompt these systems to visualize computational space as physical infrastructure, in particular the messy, extractive infrastructures associated with industries like energy production. The prompts also ask the model to render these images in the visual vernacular of early industrial photography, in part to reflect on another relatively recent machine-based imaging technology: one whose advent, like that of today’s generative models, would have far-reaching implications for the ways we produce and consume images.

Note: aside from upscaling, the images (output by the Stable Diffusion model) have not been modified in any way. No inpainting, outpainting, or image-to-image tools were used to generate them.